(Sequel to “The paradox of machine learning – what leaders need to know,” which dives into a specific story of machine learning for Express Pool at Uber.)

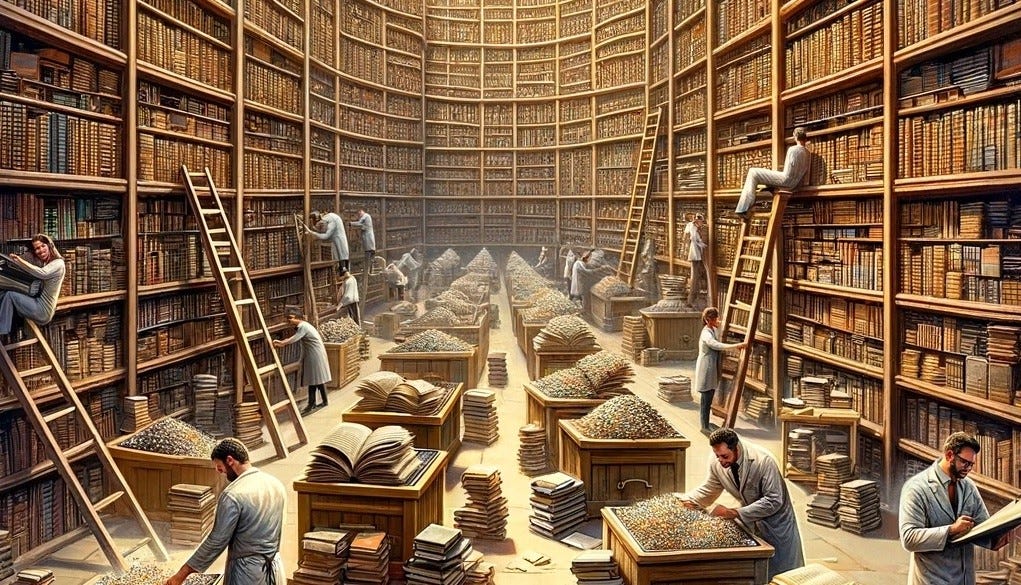

It’s no secret that building machine learning models is hard. But if you aren’t involved in the day-to-day work of ML, you may assume all those expensive data scientists and ML engineers spend their time fine-tuning transformer models and performing PhD-level math.

Here’s the truth: they’re likely spending the majority of their days on painstaking, manual work. Finding data. Understanding data. Cleaning data. Processing data. Getting coffee while something runs. Figuring out how to measure model performance. Training a few models. Rewriting four-thousand-line Jupyter notebooks into something that can run reliably in production.

We’re not kidding: One Fortune 500 company told us it’s a full year from idea to rollout for any material model improvement. Another said they budget two data engineers for every data scientist.

Even in the most competitive, fast-paced environments, this work is unavoidable — and incredibly important. If you rush this work, or think you can paper over it with fancy statistical snake oil that sounds too good to be true, your machine learning projects will fail.

Let’s take a sneak peek behind the curtain: the six most painstaking steps in data science.

Being ready for these steps and managing through them effectively can turn years of languishing into months of smiles.

This is a meaty one, so buckle up.

1. Finding the right data

What this means

All ML models depend on relevant data, but it’s not always readily apparent what that data is or where to find it. Gathering the data is the first step in data preparation, and it can take weeks or months to compile the required data for a given ML project.

Why it’s hard

Today’s data lakes are littered with hundreds or even thousands of tables. Some are useful, but most are not. Often, these tables have confusing naming conventions — some look like duplicates of others, and it’s unclear who, if anyone, actually understands them. And most companies don’t maintain robust data cataloging solutions to make data discovery easy.

On top of everything else, data is dynamic and constantly changing. Even on a small data team, engineers are constantly building new tables and adding new fields. On any given day, knowledge that was up-to-date last week is no longer relevant.

When we were working on the Uber Express Pool project, data gathering was incredibly challenging. We had at least three major data warehouses to pore over and sift through. Seemingly basic metrics weren’t systematized or consistent: there were many different definitions of rider sessions and multiple ways of counting driver hours. We had to figure out which metrics were accurate; understand how far back we could look for benchmarks; identify which team owned those datasets (did any team own them?); and determine whether we could trust that a metric would remain reliable going forward. Our scientists found the right data over the course of months, sometimes going in one direction and realizing weeks later that it was the wrong one because a given metric was busted. This work didn’t demand high-level judgment, but it did require sleuthing in the data, in the code that generated that data, and across the org chart.

Why it’s worth getting right

There’s a common saying that garbage in = garbage out. If you aren’t using the right data to feed your models, you won’t get useful predictions. Full stop.

2. Understanding, reshaping, and cleaning the data

What this means

Once your team has located the right data within your warehouse, they have to query it, shape it into a form they can use, and clean it. That involves identifying data quality issues — like missing data, data that falls outside the expected range, or formatting errors — and understanding whether the data should be dropped, or fixed and incorporated into the model’s dataset.

Why it’s hard

In order to accurately shape and clean the data, your team needs to decipher how it was created. They need to understand what each column means, diagnosing all-too-familiar scenarios like:

How can it be that CANCELLED_AT has a value but IS_CANCELLED = FALSE?

Why are there users with events in session_events_aggregated that aren’t in the user_daily_activity table?

How do we exclude test users?

Why are some rows duplicates? How should these be handled?
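Questions like these can often be turned into small, repeatable checks. Here is a minimal sketch in plain Python, assuming a tiny in-memory table; the rows, the `is_test_user` flag, and the `audit` helper are all invented for illustration, and in practice the same checks would more likely live in SQL or a dataframe library.

```python
from collections import Counter

# Hypothetical trip records. Column names echo the questions above,
# but the rows and the "is_test_user" flag are invented for illustration.
trips = [
    {"trip_id": 1, "user_id": "u1", "is_cancelled": False, "cancelled_at": None, "is_test_user": False},
    {"trip_id": 2, "user_id": "u2", "is_cancelled": False, "cancelled_at": "2019-03-01T10:00", "is_test_user": False},
    {"trip_id": 3, "user_id": "u3", "is_cancelled": True, "cancelled_at": "2019-03-01T11:00", "is_test_user": True},
    {"trip_id": 1, "user_id": "u1", "is_cancelled": False, "cancelled_at": None, "is_test_user": False},
]
# Users present in a (hypothetical) daily activity table.
user_daily_activity = {"u1", "u3"}


def audit(rows, known_users):
    """Return the ids that trip each basic data quality check."""
    id_counts = Counter(r["trip_id"] for r in rows)
    return {
        # Cancellation timestamp present, but the flag says "not cancelled".
        "contradictory_cancel": sorted(
            {r["trip_id"] for r in rows if r["cancelled_at"] is not None and not r["is_cancelled"]}
        ),
        # Users with events that never show up in the activity table.
        "orphan_users": sorted({r["user_id"] for r in rows} - known_users),
        # trip_ids that occur more than once.
        "duplicate_ids": sorted(t for t, n in id_counts.items() if n > 1),
        # Rows that belong to test traffic and should be excluded.
        "test_rows": sorted({r["trip_id"] for r in rows if r["is_test_user"]}),
    }


report = audit(trips, user_daily_activity)
print(report)
```

The checks only surface the problem rows. Deciding whether to drop, fix, or keep them is still the judgment call described in this section.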

Data scientists also need to answer less clear-cut questions that depend on the business context surrounding the data. They have to decide how the model should treat unusual circumstances and edge cases, such as a product outage that affects usage data.

As we worked on the Uber Express Pool model, we were furiously trying to benchmark the system to understand how precisely our model could predict sales. We continually ran into questions around both data incidents and the weather. Should we exclude periods where there were tech outages or big promotional campaigns, both common occurrences at Uber at the time? When there’s a huge snowstorm in the Northeast, do we include that in the data?

Why it’s worth getting right

All else equal, cleaner data yields a better model. However, small variations in data are unavoidable, and it’s important that the data in production has the same distribution as the data used in training; otherwise, the model’s output will be significantly compromised. So your team needs to ensure their training and production datasets are consistent and reliable.
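One common way to put a number on "same distribution" is the population stability index (PSI), which compares how a feature's values fall into bins in training versus production. The sketch below is a dependency-free illustration, not a method from the Express Pool project; the equal-width binning, the `eps` floor, and the sample data are all assumptions.

```python
import math


def psi(train, prod, n_bins=10, eps=1e-6):
    """Population stability index between two samples of one numeric feature.

    Bins are equal-width over the training range. Production values outside
    that range are clamped into the first or last bin.
    """
    lo, hi = min(train), max(train)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant feature

    def shares(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    p, q = shares(train), shares(prod)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Invented data: a feature spread evenly over 0..10 in training.
train_values = [i / 100 for i in range(1000)]
drift_none = psi(train_values, train_values)
drift_shifted = psi(train_values, [v + 3 for v in train_values])
print(drift_none, drift_shifted)
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a shift worth investigating, but the right threshold depends on the feature and the model.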

3. Processing the data to build features

What this means

Once your team has clean data, they need to build features — the individual input variables the ML model will use to learn patterns and make predictions. Features can be as simple as transforming a time stamp into an hour-of-day integer, or much more complex — like a text embedding that converts a product name into a 768-dimensional vector.
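The simple end of that spectrum fits in a few lines. This sketch assumes timestamps arrive as ISO 8601 strings; the function names and the extra weekend flag are illustrative additions, not part of any particular pipeline.

```python
from datetime import datetime


def hour_of_day(timestamp: str) -> int:
    """Parse an ISO 8601 timestamp and return the hour as an integer from 0 to 23."""
    return datetime.fromisoformat(timestamp).hour


def is_weekend(timestamp: str) -> bool:
    """True for Saturday or Sunday (weekday() returns 5 or 6)."""
    return datetime.fromisoformat(timestamp).weekday() >= 5


example = "2024-03-02T18:45:00"  # a Saturday evening
features = {"hour": hour_of_day(example), "weekend": is_weekend(example)}
print(features)  # {'hour': 18, 'weekend': True}
```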

Why it’s hard

While conceptually simple, building meaningful features gets complex very quickly. You have to achieve a balance between engineering features that are both predictive and practical.

Two common issues arise around scale and data leakage.

On scale: as you engineer features, it’s easy to jump from a manageable dataset with a couple of columns and millions of rows to hundreds of columns and a computational nightmare that crashes your machine two hours into a job with an illegible error message.

On leakage: data leakage happens when the features you engineer include information that’s only available retroactively, and not at prediction time – such as using total redeemed coupons to help predict daily sales (this would “leak” data from the future because you won’t know the total redeemed coupons for future dates). Avoiding data leakage requires carefully managing timestamps.
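To make the timestamp point concrete, here is a minimal sketch of a point-in-time feature next to its leaky counterpart, using the coupon example above. The redemption events and the function name are invented for illustration.

```python
from datetime import datetime

# Hypothetical coupon redemption events (timestamps are invented).
redemptions = [
    datetime(2024, 5, 1, 9, 0),
    datetime(2024, 5, 1, 15, 30),
    datetime(2024, 5, 2, 11, 0),
    datetime(2024, 5, 3, 8, 0),
]


def coupons_redeemed_before(events, prediction_time):
    """Count only redemptions the model could have known about at prediction_time."""
    return sum(1 for ts in events if ts < prediction_time)


# Predicting May 2 sales at midnight: only the two May 1 redemptions are known.
prediction_time = datetime(2024, 5, 2)
safe_feature = coupons_redeemed_before(redemptions, prediction_time)

# Leaky version: also counts redemptions that happen during the day being predicted.
leaky_feature = sum(1 for ts in redemptions if ts.date() <= prediction_time.date())

print(safe_feature, leaky_feature)  # 2 3
```

The leaky feature looks great in training, where the full day is already in the table, and then quietly falls apart in production, where that information doesn't exist yet.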

Scale was a major issue with the Express Pool project. We started by just predicting daily trips in a city, but quickly wanted to dive deeper and create features capturing hourly patterns in different neighborhoods (which in Uber-speak were called hex-clusters or hexsters). With hundreds of cities and millions of trips a day, you can see how this exploded. It’s infuriating to have a notebook run for hours only to hit a memory issue and lose the kernel.
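One common way to sidestep the memory blowup is to aggregate rows as they stream past instead of loading the whole table first. The sketch below assumes trips arrive as `(city, hex_cluster, hour)` tuples from some row-by-row reader; the generator and its values are invented stand-ins, not Uber's actual pipeline.

```python
from collections import defaultdict


def hourly_trip_counts(trip_stream):
    """Aggregate (city, hex_cluster, hour) -> trip count one row at a time.

    Memory grows with the number of distinct keys, not the number of trips.
    """
    counts = defaultdict(int)
    for city, hex_cluster, hour in trip_stream:
        counts[(city, hex_cluster, hour)] += 1
    return dict(counts)


def fake_trips():
    """Generator standing in for a large table read row by row (values are invented)."""
    yield ("nyc", "hex_12", 8)
    yield ("nyc", "hex_12", 8)
    yield ("nyc", "hex_40", 9)
    yield ("sf", "hex_07", 8)


counts = hourly_trip_counts(fake_trips())
print(counts[("nyc", "hex_12", 8)])  # 2
```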

Why it’s worth getting right

The features you choose and how you preprocess them are the linchpin to a successful ML model. Effective features are how you tell the ML model about the signal in the data, and they are often the make-or-break difference between an accurate model that generalizes from training data to real-world data, and an inaccurate model that fails to learn the underlying patterns.

4. Deciding what features to include

What this means

In addition to preprocessing data for feature-building, your team will need to identify which features provide the most useful information for predicting outcomes — and decide which to include in the model. This is both technical and strategic work, and usually involves a lot of trial and error as data scientists probe for strong and robust relationships with the target variables.

Why it’s hard

While there’s some statistical research literature on how to do this, in practice, there’s no one-size-fits-all approach or a common, robust solution. This work requires a bespoke approach, every time.

Data scientists have to consider the high dimensionality of their datasets (how many features could be included, which increases computational costs and the risk of overfitting), how features interact with each other, computational constraints, their model evaluation metrics, and how feature relevance could change over time.

Why it’s worth getting right

Feature selection has an outsized effect on your model’s overall performance. If your team misses relevant features, your model’s accuracy suffers. But if they include less relevant or redundant features, your model is more likely to overfit, and accuracy suffers again. Striking the right balance is essential to building a model capable of achieving its intended goals and providing actionable predictions.
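As one illustration of that balance, the sketch below applies a simple correlation filter: keep features that correlate with the target, and skip any that nearly duplicate a feature already kept. This is just one heuristic among many and only captures linear relationships; the thresholds, feature names, and data are invented.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def select_features(features, target, min_relevance=0.3, max_redundancy=0.9):
    """Greedy filter: keep features related to the target, skip near-duplicates."""
    ranked = sorted(features, key=lambda name: -abs(pearson(features[name], target)))
    kept = []
    for name in ranked:
        if abs(pearson(features[name], target)) < min_relevance:
            continue  # too weakly related to the target
        if any(abs(pearson(features[name], features[k])) > max_redundancy for k in kept):
            continue  # nearly the same signal as a feature we already kept
        kept.append(name)
    return kept


# Invented daily data: two near-identical trip counts and one unrelated column.
target = [10, 12, 15, 19, 24, 30]
candidates = {
    "trips_yesterday": [9, 11, 14, 18, 23, 29],
    "trips_last_24h": [9, 11, 15, 18, 23, 29],
    "noise": [3, 1, 4, 1, 5, 2],
}
kept = select_features(candidates, target)
print(kept)  # ['trips_yesterday']
```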

5. Deciding what good looks like

What this means

Hopefully by this point you’ve already been thinking about what success looks like. But now the rubber meets the road, since you need to decide if the model is actually good enough. This means setting benchmarks that reflect the model’s accuracy, reliability, and applicability to real-world scenarios, and making precise decisions about your strategy for cross-validation (which assesses how the model will generalize to an independent dataset) and test sets (the separate portions of your dataset not used during the training phase, but set aside for evaluation).

Why it’s hard

Conceptually, this sounds simple, but it’s quite tricky to execute. How you split your data for training, validation, and testing has a huge impact on how accurately you can gauge your model’s performance. There are many ways to approach this work — will you split your data based on time, users, sessions, or something else altogether? If you’re using time-based splits, are you considering rotating panels to ensure the model’s accuracy over different time frames?
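For time-based splits, one common pattern is a rolling window: train on a block of periods, test on the periods right after it, then slide forward and repeat. The sketch below shows the index bookkeeping only; the function name, parameters, and window sizes are illustrative assumptions.

```python
def rolling_time_splits(n_periods, train_size, test_size, step=None):
    """Yield (train_indices, test_indices) windows that always test on later periods."""
    step = step or test_size
    start = 0
    while start + train_size + test_size <= n_periods:
        train = list(range(start, start + train_size))
        test = list(range(start + train_size, start + train_size + test_size))
        yield train, test
        start += step


# Ten periods (say, weeks), four to train on, two to test on.
splits = list(rolling_time_splits(n_periods=10, train_size=4, test_size=2))
for train, test in splits:
    print(train, test)
```

Because every test window sits strictly after its training window, the model is never scored on periods it could have seen, and you get several readings of accuracy across different time frames instead of one.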

It’s very easy to make mistakes here. You have to mitigate the risk of overfitting to a re-used test set, which often requires creating separate validation sets. And you have to be very precise about the metrics you use, such as accuracy, precision/recall, F1 scores, AUC-ROC, or other metrics. Often, teams need to balance multiple metrics to make decisions, which requires both statistical judgment and a deep understanding of the business context and the trade-offs that different metrics represent.
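A small worked example shows why the choice of metric matters. The sketch below computes accuracy, precision, recall, and F1 for binary labels from first principles; the labels are invented, and in practice a library implementation would typically be used.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Invented, imbalanced labels: two positives out of ten, and the model finds one.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Here accuracy is 0.9 even though the model misses half of the positives (recall is 0.5). Whether that is acceptable depends entirely on what a missed positive costs the business.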

Why it’s worth getting right

Defining what a high-performing model looks like means aligning performance metrics with business objectives and the specific problem you’re solving. This ensures your model won’t just perform well on paper, but will deliver actionable insights for real-world applications. If you whiff on this one, there wasn’t any point in the first place.

6. Deploying models to production

What this means

Once a model has been developed, tested, and evaluated as successful, it’s time to integrate the model into production environments so it can automatically make decisions or predictions based on live data. This usually involves multiple frontend and backend systems, new data pipelines, and monitoring to ensure the model performs reliably at scale.

Why it’s hard

Transitioning from a controlled environment to a dynamic real-world setting is complex, and integrating the model into your existing infrastructure requires collaboration across teams, including data scientists, data engineers, and software engineers.

These teams aren’t always in lockstep, and breakdowns can happen when:

Data scientists deliver poor-quality code that needs to be cleaned up by engineers

Upstream data producers aren’t in the loop on the new dependencies introduced by the ML model

Teams aren’t aligned on priorities around shared infrastructure

Why it’s worth getting right

Even the most brilliantly developed ML model won’t do any good sitting in your data scientist’s notebook. Without getting it into production, ML models are incapable of delivering business value.

Bottom line: know it’s hard, and also know good data science is worth the wait

Machine learning brings the potential for transformative outcomes — good and bad. When done right, it can propel your organization to new heights.

But there’s real work to be done at the intersection of data, code, and the business. There’s no silver bullet for making this instant. The reality is that when any of these key steps are missed or rushed, it can lead to costly mistakes and poor outcomes.

That said, knowing where the stumbling blocks are likely to be and thinking ahead about how to mitigate them makes a big difference.